Ray-Ban Meta glasses have demonstrated the power of giving people hands-free access to key parts of their digital lives from their physical ones. We can talk to a smart AI assistant, connect with friends, and capture the moments that matter—all without ever having to pull out a phone. These stylish glasses fit seamlessly into our everyday lives, and people absolutely love them.

Yet while Ray-Ban Meta opened up an entirely new category of display-less glasses super-charged by AI, the XR industry has long dreamt of true AR glasses—a product that combines the benefits of a large holographic display and personalized AI assistance in a comfortable, stylish, all-day wearable form factor.

And today, we’ve pushed that dream closer to reality with the unveiling of Orion, which we believe is the most advanced pair of AR glasses ever made. In fact, it might be the most challenging consumer electronics device produced since the smartphone. Orion is the result of breakthrough inventions in virtually every field of modern computing—building on the work we’ve been doing at Reality Labs for the last decade. It’s packed with entirely new technologies, including the most advanced AR display ever assembled and custom silicon that enables powerful AR experiences to run on a pair of glasses using a fraction of the power and weight of an MR headset.

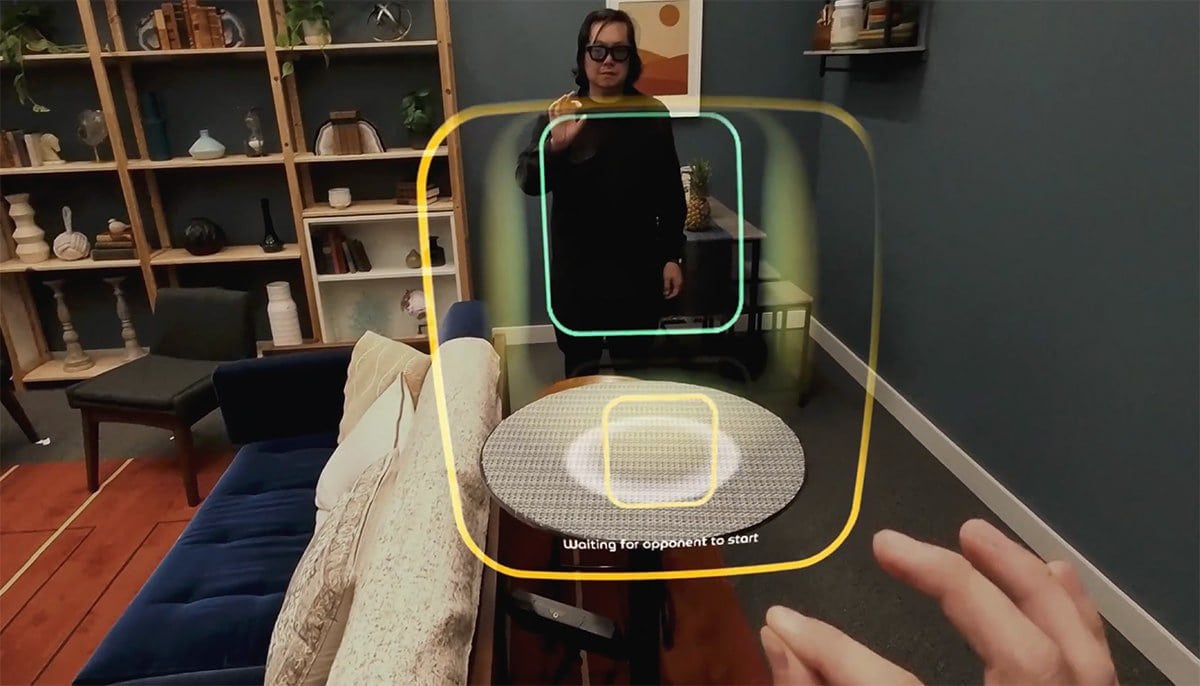

Orion’s input system seamlessly combines voice, eye gaze, and hand tracking with an EMG wristband that lets you swipe, click, and scroll while keeping your arm resting comfortably by your side, letting you stay present in the world and with the people around you as you interact with rich digital content.

Beginning today at Connect and continuing throughout the year, we’re opening up access to our Orion product prototype for Meta employees and select external audiences so our development team can learn, iterate, and build towards our consumer AR glasses product line, which we plan to begin shipping in the near future.

Why AR Glasses?

There are three primary reasons why AR glasses are key to unlocking the next great leap in human-oriented computing.

They enable digital experiences that are unconstrained by the limits of a smartphone screen. With large holographic displays, you can use the physical world as your canvas, placing 2D and 3D content and experiences anywhere you want.

They seamlessly integrate contextual AI that can sense and understand the world around you in order to anticipate and proactively address your needs.

They’re lightweight and great for both indoor and outdoor use—and they let people see each other’s real face, real eyes, and real expressions.

That’s the north star our industry has been building towards: a product combining the convenience and immediacy of wearables with a large display, high-bandwidth input, and contextualized AI in a form factor that people feel comfortable wearing in their daily lives.

Compact Form Factor, Compounded Challenges

For years, we’ve faced a false choice—either virtual and mixed reality headsets that enable deep, immersive experiences in a bulky form factor or glasses that are ideal for all-day use but don’t offer rich visual apps and experiences given the lack of a large display and corresponding compute power.

But we want it all, without compromises. For years, we’ve been hard at work taking the incredible spatial experiences afforded by VR and MR headsets and miniaturizing the technology necessary to deliver those experiences into a pair of lightweight, stylish glasses. Nailing the form factor, delivering holographic displays, developing compelling AR experiences, and creating new human-computer interaction (HCI) paradigms, all in one cohesive product, is one of the most difficult challenges our industry has ever faced. It was so challenging that we thought we had less than a 10 percent chance of pulling it off successfully.

Until now.

A Groundbreaking Display in an Unparalleled Form Factor

At approximately 70 degrees, Orion has the largest field of view in the smallest AR glasses form factor to date. That field of view unlocks truly immersive use cases for Orion, from multitasking windows and big-screen entertainment to lifesize holograms of people—all digital content that can seamlessly blend with your view of the physical world.

For Orion, field of view was our holy grail. We were bumping up against the laws of physics and had to bend beams of light in ways they don’t naturally bend—and we had to do it in a power envelope measured in milliwatts.

Instead of glass, we made the lenses from silicon carbide—a novel application for AR glasses. Silicon carbide is incredibly lightweight, it doesn’t result in optical artifacts or stray light, and it has a high refractive index—all optical properties that are key to a large field of view. The waveguides themselves have really intricate and complex nano-scale 3D structures to diffract or spread light in the ways necessary to achieve this field of view. And the projectors are uLEDs—a new type of display technology that’s super small and extremely power efficient.
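To see why a high refractive index matters for field of view, here is a minimal sketch of a textbook idealization, not a description of Orion's optics. It assumes a single diffractive waveguide, one grating direction, and one wavelength, where light must stay between the total-internal-reflection limit and grazing incidence. Under those assumptions the in-air field of view along that axis satisfies sin(FOV/2) <= (n - 1) / 2. The function name and the refractive index values below are illustrative assumptions (approximate figures for visible light), not numbers from this article.

```python
import math


def max_fov_degrees(refractive_index: float) -> float:
    """Idealized one-axis field-of-view bound for a single diffractive waveguide.

    In normalized k-space, guided light must satisfy 1 <= k <= n, so a
    symmetric field of view of half-angle theta needs 2*sin(theta) <= n - 1.
    Real designs fall below this bound or use other tricks to extend it.
    """
    if refractive_index < 1.0:
        raise ValueError("refractive index must be at least 1")
    # Clamp at 1.0: beyond n = 3 the bound saturates at a full 180 degrees.
    sine_of_half_angle = min((refractive_index - 1.0) / 2.0, 1.0)
    return 2.0 * math.degrees(math.asin(sine_of_half_angle))


# Assumed, approximate indices for illustration only.
materials = [
    ("conventional glass (assumed n = 1.5)", 1.5),
    ("high-index glass (assumed n = 2.0)", 2.0),
    ("silicon carbide (assumed n = 2.6)", 2.6),
]

for name, n in materials:
    print(f"{name}: bound of about {max_fov_degrees(n):.0f} degrees")
```

The bound grows quickly with refractive index, which is the intuition behind choosing a high-index lens material when field of view is the priority.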

Orion is unmistakably a pair of glasses in both look and feel—complete with transparent lenses. Unlike MR headsets or other AR glasses today, you can still see each other’s real eyes and expressions, so you can be present and share the experience with the people around you. Dozens of innovations were required to get the industrial design down to a contemporary glasses form factor that you’d be comfortable wearing every day. Orion is a feat of miniaturization—the components are packed down to a fraction of a millimeter. And we managed to embed seven tiny cameras and sensors in the frame rims.

We had to maintain optical precision at one-tenth the thickness of a strand of human hair. And the system can detect tiny amounts of movement—like the frames expanding or contracting in various room temperatures—and then digitally correct for the necessary optical alignment, all within milliseconds. We made the frames out of magnesium—the same material used in F1 race cars and spacecraft—because it’s lightweight yet rigid, so it keeps the optical elements in alignment and efficiently conducts away heat.

Heating and Cooling

Once we cracked the display (no pun intended) and overcame the physics problems, we had to navigate the challenge of really powerful compute alongside really low power consumption and the need for heat dissipation. Unlike today’s MR headsets, you can’t stuff a fan into a pair of glasses. So we had to get creative. A lot of the materials used to cool Orion are similar to those used by NASA to cool satellites in outer space.

We built highly specialized custom silicon that’s extremely power efficient and optimized for our AI, machine perception, and graphics algorithms. We built multiple custom chips and dozens of highly custom silicon IP blocks inside those chips. That lets us take the algorithms for hand tracking, eye tracking, and simultaneous localization and mapping (SLAM), which normally draw hundreds of milliwatts of power and generate a corresponding amount of heat, and run them on just a few dozen milliwatts.

And yes, custom silicon continues to play a critical role in product development at Reality Labs, despite what you may have read elsewhere.

Effortless EMG

Every new computing platform brings with it a paradigm shift in the way we interact with our devices. The invention of the mouse helped pave the way for the graphical user interfaces (GUIs) that dominate our world today, and smartphones didn’t start to really gain traction until the advent of the touch screen. And the same rule holds true for wearables.

We’ve spoken about our work with electromyography, or EMG, for years, driven by our belief that input for AR glasses needs to be fast, convenient, reliable, subtle, and socially acceptable. And now, that work is ready for prime time.

Orion’s input and interaction system seamlessly combines voice, eye gaze, and hand tracking with an EMG wristband that lets you swipe, click, and scroll with ease.

It works—and feels—like magic. Imagine taking a photo on your morning jog with a simple tap of your fingertips or navigating menus with barely perceptible movements of your hands. Our wristband combines a high-performance textile with embedded EMG sensors to detect the electrical signals generated by even the tiniest muscle movements. An on-device ML processor then interprets those EMG signals to produce input events that are transmitted wirelessly to the glasses. The system adapts to you, so it gets better and more capable at recognizing the most subtle gestures over time. And today, we’re sharing more about our support for external research focused on expanding the equity and accessibility potential of EMG wristbands.
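As a rough illustration of the signal-to-event flow described above, the sketch below turns a stream of muscle-signal samples into discrete "tap" events. It is a deliberately simplified stand-in: the wristband uses an on-device ML processor, whereas this example uses a plain RMS envelope and a fixed threshold. The function names, window size, threshold, and synthetic signal are all assumptions made for the example.

```python
import math
from typing import List


def rms_envelope(samples: List[float], window: int) -> List[float]:
    """Root-mean-square level of consecutive, non-overlapping windows."""
    envelope = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / window))
    return envelope


def detect_taps(envelope: List[float], threshold: float) -> List[int]:
    """Return the window indices where activity rises above the threshold.

    Only the rising edge is reported, so one sustained burst of muscle
    activity yields a single event rather than one per window.
    """
    events = []
    active = False
    for index, level in enumerate(envelope):
        if level >= threshold and not active:
            events.append(index)
            active = True
        elif level < threshold:
            active = False
    return events


# Synthetic signal (assumed values): rest, a burst, rest, another burst.
rest = [0.01, -0.01] * 10   # 20 low-amplitude samples
burst = [0.5, -0.5] * 5     # 10 high-amplitude samples
signal = rest + burst + rest + burst

envelope = rms_envelope(signal, window=10)
taps = detect_taps(envelope, threshold=0.1)
print("tap events at windows:", taps)
```

A learned model in place of the fixed threshold is what would let a system like this adapt to an individual wearer over time.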

Meet the Wireless Compute Puck

True AR glasses must be wireless, and they must also be small. So we built a wireless compute puck for Orion. It takes some of the load off the glasses, enabling longer battery life and a better form factor while keeping latency low.

The glasses run all of the hand tracking, eye tracking, SLAM, and specialized AR world locking graphics algorithms while the app logic runs on the puck to keep the glasses as lightweight and compact as possible.

The puck has dual processors, including one custom designed right here at Meta, and it provides the compute power necessary for low-latency graphics rendering, AI, and some additional machine perception.

And since it’s small and sleek, you can comfortably toss the puck in a bag or your pocket and go about your business—no strings attached.

AR Experiences

Of course, as with any piece of hardware, it’s only as good as the things you can do with it. And while it’s still early days, the experiences afforded by Orion are an exciting glimpse of what’s to come.

We’ve got our smart assistant, Meta AI, running on Orion. It understands what you’re looking at in the physical world and can help you with useful visualizations. Orion uses the same Llama model that powers AI experiences running on Ray-Ban Meta smart glasses today, plus custom research models to demonstrate potential use cases for future wearables development.

You can take a hands-free video call on the go to catch up with friends and family in real time, and you can stay connected on WhatsApp and Messenger to view and send messages. No need to pull out your phone, unlock it, find the right app, and let your friend know you’re running late for dinner—you can do it all through your glasses.

You can play shared AR games with family on the other side of the country or with your friend on the other side of the couch. And Orion’s large display lets you multitask with multiple windows to get stuff done without having to lug around your laptop.

The experiences available on Orion today will help chart the roadmap for our consumer AR glasses line in the future. Our teams will continue to iterate and build new immersive social experiences, alongside our developer partners, and we can’t wait to share what comes next.

A Purposeful Product Prototype

While Orion won’t make its way into the hands of consumers, make no mistake: This is not a research prototype. It’s the most polished product prototype we’ve ever developed—and it’s truly representative of something that could ship to consumers. Rather than rushing to put it on shelves, we decided to focus on internal development first, which means we can keep building quickly and continue to push the boundaries of the technology and experiences.

And that means we’ll arrive at an even better consumer product faster.

What Comes Next

Two major obstacles have long stood in the way of mainstream consumer-grade AR glasses: the technological breakthroughs necessary to deliver a large display in a compact glasses form factor and the necessity of useful and compelling AR experiences that can run on those glasses. Orion is a huge milestone, delivering true AR experiences running on reasonably stylish hardware for the very first time.

Now that we’ve shared Orion with the world, we’re focused on a few things:

Tuning the AR display quality to make the visuals even sharper

Optimizing wherever we can to make the form factor even smaller

Building at scale to make them more affordable

In the next few years, you can expect to see new devices from us that build on our R&D efforts. And a number of Orion’s innovations have already been extended to our current consumer products as well as our future product roadmap. We’ve optimized some of our spatial perception algorithms, which are running on both Meta Quest 3S and Orion. While the eye gaze and subtle gestural input system was originally designed for Orion, we plan to use it in future products. And we’re exploring the use of EMG wristbands across future consumer products as well.

Orion isn’t just a window into the future—it’s a look at the very real possibilities within reach today. We built it in our pursuit of what we do best: helping people connect. From Ray-Ban Meta glasses to Orion, we’ve seen the good that can come from letting people stay more present and empowered in the physical world, even while tapping into all the added richness the digital world has to offer.

We believe you shouldn’t have to choose between the two. And with the next computing platform, you won’t have to.